Compact AI Acceleration: Geniatech’s M.2 Module for Scalable Deep Learning



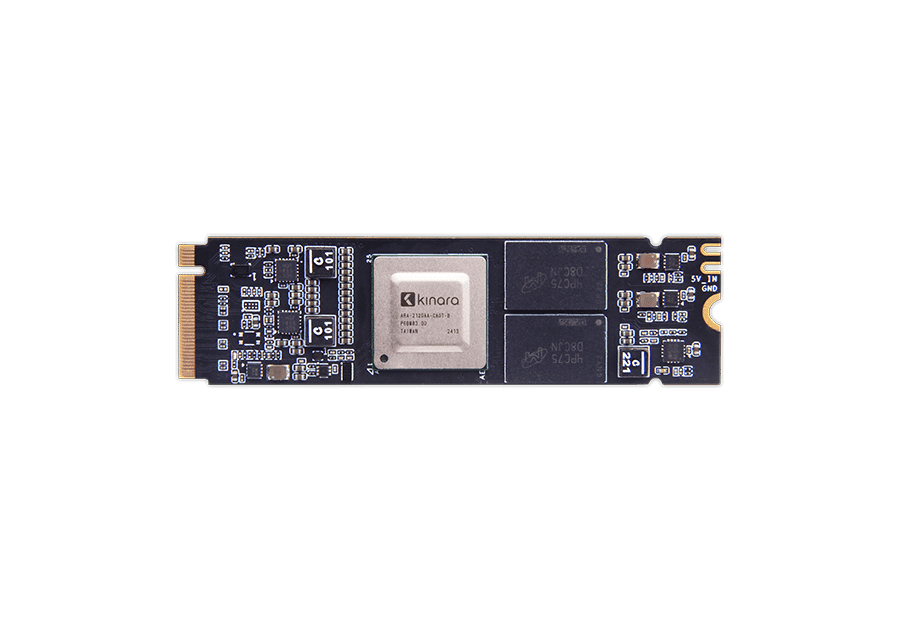

Geniatech M.2 AI Accelerator Module: Compact Power for Real-Time Edge AI

Artificial intelligence (AI) continues to revolutionize how industries operate, particularly at the edge, where fast processing and real-time insights are not just desirable but critical. The M.2 AI accelerator has emerged as a compact yet powerful solution for meeting the demands of edge AI applications. Delivering strong performance in a small footprint, this module is rapidly driving innovation in everything from smart cities to industrial automation.

The Need for Real-Time Processing at the Edge

Edge AI bridges the gap between people, devices, and the cloud by enabling real-time data processing where it's most needed. Whether powering autonomous vehicles, smart security cameras, or IoT sensors, decision-making at the edge must happen in microseconds. Traditional computing systems have struggled to keep up with these demands.

Enter the M.2 AI Accelerator Module. By integrating high-performance machine learning capabilities into a compact form factor, this technology is reshaping what real-time processing looks like. It provides the speed and efficiency organizations need without relying solely on cloud infrastructure, which can introduce latency and increase costs.

What Makes the M.2 AI Accelerator Module Stand Out?

• Compact Design

One of the standout features of the AI accelerator module is its compact M.2 form factor. It fits easily into a variety of embedded systems, servers, or edge devices without the need for extensive hardware modifications. This makes deployment simpler and far more space-efficient than larger alternatives.

• High Throughput for Machine Learning Tasks

Equipped with advanced neural network processing capabilities, the module delivers impressive throughput for tasks like image recognition, video analysis, and speech processing. The architecture is designed to handle complex ML models in real time.

• Energy Efficient

Power consumption is a major concern for edge devices, especially those that operate in remote or power-sensitive environments. The module is optimized for performance-per-watt while sustaining consistent and reliable workloads, making it well suited to battery-operated or low-power systems.

• Versatile Applications

From healthcare and logistics to smart retail and manufacturing automation, the M.2 AI Accelerator Module is redefining possibilities across industries. For instance, it powers advanced video analytics for smart surveillance or supports predictive maintenance by analyzing sensor data in industrial settings.
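The performance-per-watt metric mentioned under Energy Efficient is simply throughput divided by power draw. The sketch below shows one way to compare two devices on that basis. The function name, the device labels, and every number are hypothetical placeholders chosen for illustration; they are not measurements of the Geniatech module or of any real processor.

```python
def performance_per_watt(inferences_per_second: float, power_watts: float) -> float:
    """Return throughput per watt (inferences per second per watt)."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return inferences_per_second / power_watts


# Hypothetical figures for illustration only, not vendor or benchmark data.
candidates = {
    "accelerator_module": {"inferences_per_second": 400.0, "power_watts": 5.0},
    "general_purpose_cpu": {"inferences_per_second": 120.0, "power_watts": 15.0},
}

# Compute the metric for each device so they can be compared on equal terms.
efficiency = {
    name: performance_per_watt(**spec) for name, spec in candidates.items()
}

for name, value in efficiency.items():
    print(f"{name}: {value:.1f} inferences/s per watt")
```

When evaluating real hardware, both inputs should come from measurements taken under the same model, batch size, and precision, since the ratio is only meaningful for like-for-like workloads.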

Why Edge AI is Gaining Momentum

The rise of edge AI is supported by growing data volumes and an increasing number of connected devices. According to recent industry figures, there are around 14 billion IoT devices operating globally, a number expected to exceed 25 billion by 2030. With this shift, traditional cloud-dependent AI architectures face bottlenecks such as increased latency and privacy concerns.

Edge AI addresses these issues by processing data locally, delivering near-instantaneous insights while safeguarding user privacy. The M.2 AI Accelerator Module aligns well with this trend, enabling businesses to harness the full potential of edge intelligence without compromising on operational efficiency.

Key Statistics Highlighting its Impact

To understand the impact of such technologies, consider these highlights from recent market reports:

• Growth in Edge AI Market: The global edge AI hardware market is predicted to grow at a compound annual growth rate (CAGR) exceeding 20% through 2028. Devices like the M.2 AI Accelerator Module are crucial for driving this growth.

• Performance Benchmarks: Labs testing AI accelerator modules in real-world scenarios have shown up to a 40% improvement in real-time inferencing workloads compared to conventional edge processors.

• Adoption Across Industries: Around 50% of enterprises deploying IoT devices are expected to incorporate edge AI applications by 2025 to boost operational efficiency.
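To put the CAGR figure above in perspective, a compound annual growth rate translates into a market-size multiplier through simple compounding. The sketch below works that out for a 20% rate. The five-year horizon and the function name are assumptions made for illustration; the article does not state a base year or a market size.

```python
def compound_growth(start_value: float, annual_rate: float, years: int) -> float:
    """Return the value after compounding `annual_rate` for `years` years."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return start_value * (1.0 + annual_rate) ** years


# Assumption: a 5-year horizon, with the market normalized to 1.0 at the start.
multiplier = compound_growth(1.0, 0.20, 5)
print(f"Market multiplier after 5 years at 20% CAGR: {multiplier:.2f}x")
```

Under these assumptions, a market growing at 20% per year would be roughly two and a half times its starting size after five years. A rate "exceeding 20%" would push that multiplier higher.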

With such numbers underscoring its relevance, the M.2 AI Accelerator Module proves to be not just a tool but a game-changer in the shift to smarter, faster, and more scalable edge AI solutions.

Pioneering AI at the Edge

The M.2 AI Accelerator Module represents more than just another piece of hardware; it's an enabler of next-gen innovation. Organizations adopting this technology can stay ahead of the curve in deploying agile, real-time AI applications fully optimized for edge environments. Compact yet powerful, it encapsulates the progress of the AI revolution.

From its ability to process machine learning models on the fly to its flexibility and power efficiency, this module is proving that edge AI is not a distant dream. It's happening now, and with tools like this, it's easier than ever to bring smarter, faster AI closer to where the action happens.